ChatGPT Remembers Everything About You—But Is This a Good Thing?

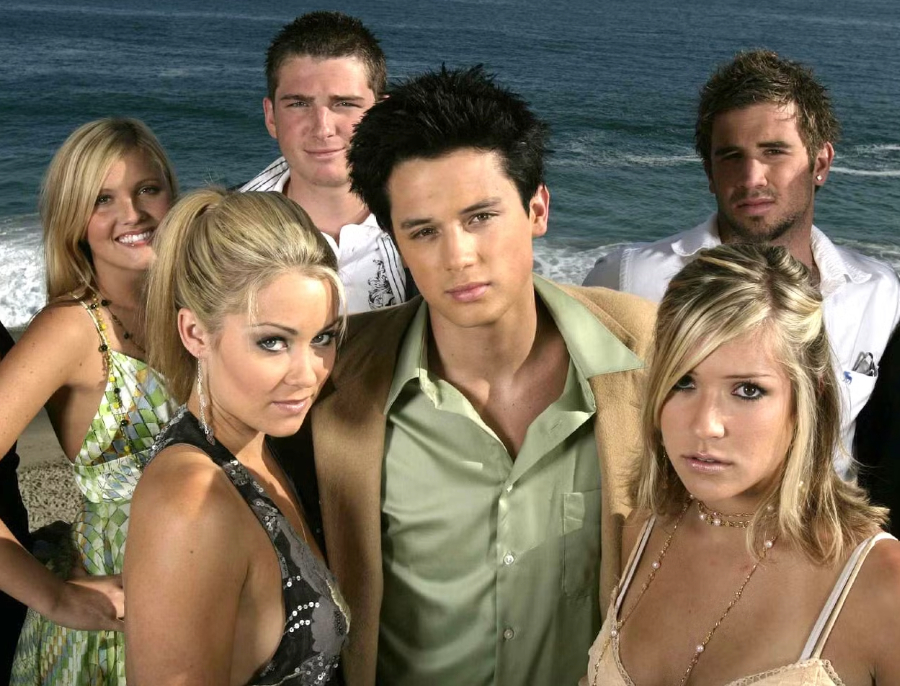

The gut-punch 2013 Black Mirror episode “Be Right Back” imagines an AI, not unlike ChatGPT, that consumes a lifetime of texts, posts, and videos to rebuild a dead boyfriend. At first, the replica feels comforting. Yet the more perfectly it recalls every detail, the more painfully it exposes what real people are: contradictory, impatient, and sometimes selfish.

That tension sits at the heart of today’s debate over ChatGPT: a tool that never forgets can be a brilliant assistant or a relentless archivist, depending on who is holding the receipts.

Through her android boyfriend (Domhnall Gleeson), Martha (Hayley Atwell) learns that endless memory isn’t automatically loving. A similar question hovers over ChatGPT: does total recall empower users or trap them in a data loop they can’t escape? The Intersect with Cory Corrine dives straight into that tension. Corrine opens with a reality check: “ChatGPT remembers everything about you, which is part of what makes it powerful.” But power, of course, cuts both ways.

ChatGPT goes mainstream and Gen Z leans into ‘data nihilism’

“A colleague of mine on the show noted that on the train last week, every single person that she saw had their laptop open to ChatGPT,” Corrine says. Tech and culture journalist Taylor Lorenz, one of The Intersect’s two guests, observes a new resignation among young users and calls it “data nihilism.” Today’s teens and Gen Zers offer a collective shrug and ask, “What power do I have?” because they assume their information is already scattered across the web.

Yet that shrug can mask real risks. “We’re left with no choice but to proceed, but proceed with caution,” Corrine states.

AI: the risks and rewards

Privacy scholar Dr. Jen King, The Intersect’s other guest and a privacy and data policy fellow at the Stanford University Institute for Human-Centered Artificial Intelligence, reminds listeners that defaults matter. “Those chats are being, for the most part, collected by default and being used to retrain these systems,” she says. So unless users change their settings, their prompts can become training fuel.

“I personally use a service called DeleteMe to remove my data from the web,” Lorenz says. King adds a bigger-picture warning: “The value proposition right now for most of these [AI] tools is really oversold.”

When Corrine asks whether the conveniences outweigh the costs, Lorenz doesn’t slam the brakes. “Try it out, see if it’s useful for something,” she suggests. “But just go into it in a mindful way.”

King echoes that call for caution, stressing that limitless personalization invites manipulation. She flags looming “AI agents and just this increased desire to make personalized experiences for everybody,” warning that a chatbot recommending a fire extinguisher might quietly steer users toward the brand that paid for placement.

How to keep your data—and sanity—intact

Proceeding with caution doesn’t mean ditching technology. It means tweaking habits so ChatGPT serves you rather than surveils you.

- Check the settings first. Turn off chat history and training-data sharing if the platform allows it; King notes that many systems collect data by default.

- Limit what you share. Lorenz highlights how users “might be actually disclosing a lot more data than they realize” during casual prompts. Sensitive health or financial details deserve an offline notebook, not an AI text box.

- Scrub what’s already out there. Services like DeleteMe help, and so does an old-fashioned Google sweep of your name.

- Advocate for better laws. Lorenz urges listeners to “focus on data privacy and advocate for it” because systemic rules protect everyone.

- Remember the off switch. King keeps location tracking disabled because constant pings “paint a portrait of what you’re doing and everywhere you go.”

ChatGPT Memory is a black mirror, so use it wisely

Black Mirror warned that perfect recall can feel inhuman, and Corrine’s panel shows the warning is no longer fiction. ChatGPT can draft résumés, brainstorm trip itineraries, or teach quadratic equations at lightning speed. It can also stockpile heartbreak diaries and late-night confessions for uses that no one has yet imagined. The most intelligent move isn’t panic; it’s mindfulness.
